As AI tools like ChatGPT become deeply woven into everyday life, an increasing number of people, especially younger users, are turning to the chatbot for advice on relationships, mental health, and life decisions. The habit runs so deep that many young adults turn to ChatGPT over even minor inconveniences because it makes them feel heard. However, OpenAI CEO Sam Altman has issued a serious warning about relying too heavily on AI for personal matters, particularly when it comes to privacy and decision-making.

Conversations with ChatGPT are not confidential

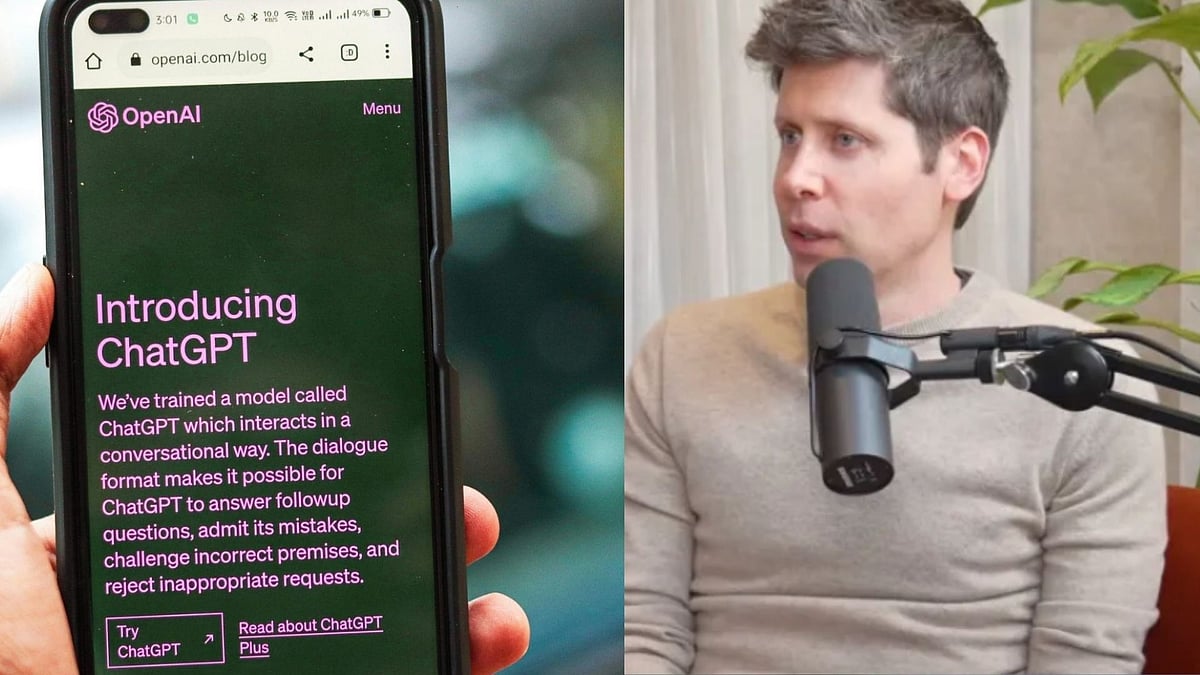

In a recent appearance on the This Past Weekend podcast hosted by comedian Theo Von, Sam Altman opened up about a growing concern: ChatGPT conversations are not legally protected like those with therapists, doctors, or lawyers. “Right now, if you talk to a therapist or a lawyer or a doctor about those problems, there's legal privilege for it. There's confidentiality. We haven’t figured that out yet for ChatGPT,” Altman explained.

This means that anything shared with the chatbot, no matter how personal or sensitive, could be accessed or even used in court if required. Unlike traditional counseling sessions, where your conversations are protected by law, AI interactions currently lack the same legal safeguards.

Over-dependence on AI for life advice raises ethical flags

Altman also highlighted a troubling trend: users, particularly Gen Z, are becoming overly dependent on ChatGPT for making personal decisions. Speaking at a Federal Reserve banking conference, he noted that some individuals treat ChatGPT as their main life advisor. “There are young people who say, ‘I can’t make any decision in my life without telling ChatGPT everything that’s going on. It knows me. I’m going to do whatever it says.’ That feels really bad to me,” he said.

While ChatGPT is designed to offer helpful, informed responses, Altman believes there is something inherently wrong with people outsourcing all critical thinking to an AI system. He emphasised that blind reliance on AI, even if the advice seems good, is not a healthy or sustainable path forward.

A privacy gap that could have real consequences

The lack of legal protections for AI-based interactions could lead to serious complications in the future. Altman warned that if private exchanges with ChatGPT end up in legal proceedings, OpenAI may be legally obligated to hand them over. “If someone confides their most personal issues to ChatGPT and that ends up in a courtroom, we could be compelled to hand that over. That’s a real problem,” he said.

He added that this privacy gap didn’t even exist as a public concern just a year ago, but it’s now becoming a pressing issue as more people begin using AI for deeply personal reasons.

ChatGPT can be a tool, not a therapist

Although ChatGPT may offer insight, emotional clarity, or practical suggestions, it is not a substitute for professional care. A licensed therapist brings human empathy, legal confidentiality, and clinical training that AI simply cannot replicate.

Altman admitted that while ChatGPT may sometimes deliver advice better than humans, it lacks context, empathy, and responsibility. It also cannot build a truly trusting or safe environment for mental health support.

How to use AI responsibly

Here’s how users can make the most of ChatGPT without falling into the trap of over-dependence:

Use it for organising thoughts or brainstorming.

Ask for general guidance but verify with human experts.

Avoid sharing sensitive, legal, or confidential information.

Don’t treat it as a replacement for therapy or clinical advice.

Seek help from mental health professionals for serious emotional concerns.